User research often takes time. How do you make it work in startups?

Three tips for speeding up user research in small organizations

Photo by Pexels LATAM: https://www.pexels.com/photo/men-running-down-a-countryside-road-in-a-marathon-17979492/

One of the most complex challenges I had when I moved to designing for startups was adapting user research to the fast-paced environment.

User research is often crucial here: when you only have limited resources, you must create products your users want or need.

But getting access to users, not to mention testing and analysis, can be a big challenge, especially in the time you’re given. It can feel like the team is sprinting down a path as fast as possible, so you don’t get weeks to get user research done; you have days.

So, how can you make user research work in those environments? I figured out three methods to speed up the process through Data-Informed Design.

As it turns out, it often starts with ‘avoiding the spreadsheet.’

Avoid the (text) spreadsheet and focus on the 10,000 ft view

You may have been taught to compile your user testing results into a giant spreadsheet: I know I was.

This massive spreadsheet of data details each user’s response to questions and actions, allowing you to cross-reference user actions and eventually form a report.

The problem is that this approach won’t work for many companies, let alone startups, for two reasons: nobody can read the final result, and it takes too long.

Think about it: How long does it take you to dig through a spreadsheet, going back and forth, to compile all that research? It’s often either a ton of late nights and weekends or several weeks.

Moreover, you often don’t need that level of detail. Part of learning about Data-Informed Design was learning how other team members think. In many cases, from C-level executives to Product Managers, your team is looking for the 10,000-foot view.

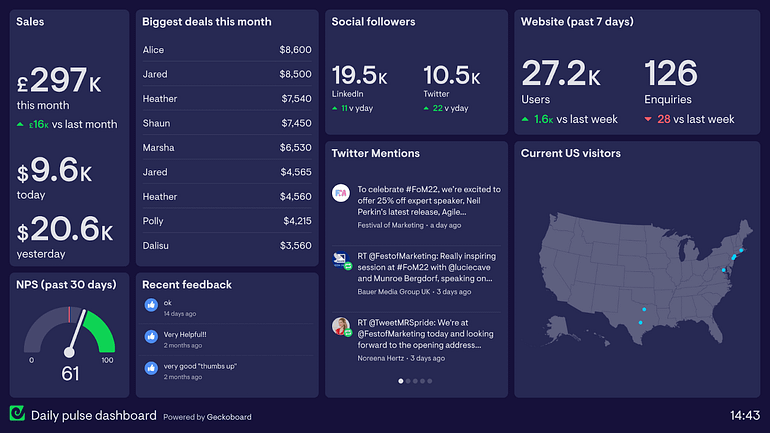

Simply put, they’re looking for problem areas and understanding where to invest resources, time, and strategies. This is why many tools, such as Product Dashboards, focus on that high-level view that helps them understand where they can take action.

As a result, their list of priorities is as follows:

Where are the problem areas of a design/workflow?

What should we/can we do about it?

Why are we running into these issues?

If you’re working at a larger organization, the “Why” matters a lot more, as it’s a way to justify the actions you’ve taken or the resources you’ve used (i.e., Cover Your A*s). As a result, the details of whatever you report often sit on a shelf until it’s time for a financial audit.

Spelling out the “Why” matters less with smaller companies and startups. When the main issue is shipping a product while ensuring sufficient user demand, the first two bullet points are a higher priority. It’s often good to capture the “Why” internally, but you don’t need to spend extra effort to present all the details to the rest of your team.

Once you realize that, you can ditch the large spreadsheet and use a couple of alternatives. Here are three of my favorites that I use regularly.

The rainbow spreadsheet: making user research visible with color

By and large, this method has replaced the giant research spreadsheets people used to make.

Created by Tomer Sharon, Co-founder and CEO of anywell, this is a quick way to invite your stakeholders to collaborate and provide helpful usability testing feedback.

The idea is simple: assign each participant a specific column (and color) and create rows of user observations. Then, during user testing, you (or your notetakers) tag each observation a participant exhibits with a sticky note in that participant’s color.

If there’s a new observation not on the list, you or your notetaker add another row for that issue.

If multiple people run into the same issue, you’ll have observations with multiple stickies (of different colors) next to them. This way, you can quickly see the most common usability issues (or responses) that most people encounter.
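To make the structure concrete, here is a minimal Python sketch of a rainbow spreadsheet as a data structure, assuming each sticky note can be recorded as a (participant, observation) pair. The participant IDs, observations, and function names (`build_rainbow_sheet`, `rank_observations`, `render`) are hypothetical; this illustrates the counting logic only and is not Tomer Sharon’s template or a replacement for the shared board.

```python
from collections import defaultdict


def build_rainbow_sheet(tags):
    """Map each observation (a row) to the set of participants (colors) who showed it."""
    sheet = defaultdict(set)
    for participant, observation in tags:
        # An observation not seen before automatically becomes a new row.
        sheet[observation].add(participant)
    return dict(sheet)


def rank_observations(sheet):
    """Return (observation, participant_count) pairs, most common first."""
    counts = [(obs, len(people)) for obs, people in sheet.items()]
    return sorted(counts, key=lambda item: (-item[1], item[0]))


def render(sheet, participants):
    """Print a text grid: one column per participant, 'X' where they hit the issue."""
    print("Observation".ljust(30) + " ".join(p.ljust(3) for p in participants))
    for obs, _ in rank_observations(sheet):
        marks = " ".join(("X" if p in sheet[obs] else ".").ljust(3) for p in participants)
        print(obs.ljust(30) + marks)


# Hypothetical session notes, not real study data.
tags = [
    ("P1", "Missed the Save button"),
    ("P2", "Missed the Save button"),
    ("P3", "Missed the Save button"),
    ("P1", "Missed the Save button"),  # repeat tag: still one sticky per participant
    ("P3", "Confused by filter labels"),
]
sheet = build_rainbow_sheet(tags)
render(sheet, ["P1", "P2", "P3"])
```

Storing participants as a set means someone who trips over the same issue twice still counts once, which mirrors the one-sticky-per-color idea of the board.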

I’ve covered this method in another article, but it’s worth mentioning that it often lets you share high-level summaries with your team within a day or two.

However, it isn’t always suited to tracking page-level details or highlighting specific workflow issues. That’s where another process comes in.

Sticky notes on pages help show problematic areas in a workflow

One of the more common user research questions stakeholders may want to answer is, “Where are users running into issues?” If that’s the case, one of the simplest ways to find the answer is page-level notetaking.

In this process, you start by taking screenshots of each design page and putting them into a document (usually a FigJam document). You then assign each participant a different sticky-note color and have your notetakers place their notes next to the relevant pages throughout the session.

What works best, I’ve found, is to create a template for each user test and then compile them at the end. That way, each debrief focuses on a single user, and later debriefs can focus on common trends.

While this takes more time to compile than the first method, some patterns stand out immediately.

For example, it’s usually immediately apparent which pages users focus on most. If one page has 60 sticky notes, while the rest have 10, users found that page the most problematic (or just had a lot to say about that page). These are high-level insights that your team will probably want to know.
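The tallying behind that observation can be sketched in a few lines of Python. This assumes you can export each sticky note’s page name (for example, by copying the notes out of the FigJam document); the page names, counts, and the 3× median threshold in the hypothetical `flag_hot_pages` helper are illustrative choices, not part of the method itself.

```python
from collections import Counter
from statistics import median


def flag_hot_pages(sticky_pages, factor=3.0):
    """Count stickies per page and flag pages with at least `factor` x the median count.

    `factor` is a hypothetical threshold chosen for illustration.
    """
    counts = Counter(sticky_pages)
    typical = median(list(counts.values()))
    hot = [page for page, n in counts.most_common() if n >= factor * typical]
    return counts, hot


# Hypothetical export: one entry per sticky note, naming the page it sits next to.
sticky_pages = ["Checkout"] * 60 + ["Home"] * 10 + ["Search"] * 10 + ["Profile"] * 10
counts, hot = flag_hot_pages(sticky_pages)
for page, n in counts.most_common():
    marker = "<- look here first" if page in hot else ""
    print(f"{page:<10} {n:>3} {marker}")
```

As noted above, a high count may mean a problematic page or simply one users had a lot to say about, so the flag tells you where to look rather than what went wrong.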

After that, you’ll want to dig a bit deeper into common responses across participants (the yellow box above), forming the basis of your findings.

That brings me to my third tip, which is controversial.

Use ChatGPT for inter-rater reliability once you’ve done your research

I’ll say this now: ChatGPT cannot replace doing your user research analysis.

Trying to do so will quickly get you into hot water for two key reasons:

It can’t give enough details for actionable next steps

One of the main questions team members ask once you finish analysis is, “What do we do next?” If you don’t understand your research well enough (or lack the details) to answer that, you may not only have invalidated your user research efforts; you might also have endangered budgets for future testing.

However, one thing ChatGPT can offer is the ability to double-check your work. This relates to an academic concept called inter-rater reliability, which researchers use to check whether different people analyzing the same data reach the same conclusions or values.

You can’t trust ChatGPT not to hallucinate or make mistakes, but you might still want to see whether its conclusions surface anything you’ve missed (or want to bring up).
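Inter-rater reliability is often quantified with Cohen’s kappa, which compares how often two raters agree with how often they would agree by chance: κ = (p_o − p_e) / (1 − p_e). The process described here is a qualitative double-check, so this is a swapped-in technique rather than the author’s method: a minimal Python sketch, assuming you and ChatGPT have each assigned one theme label to the same list of notes. The notes, labels, and helper names are hypothetical.

```python
from collections import Counter


def cohens_kappa(rater_a, rater_b):
    """Cohen's kappa for two raters: (p_o - p_e) / (1 - p_e)."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("Both raters must label the same non-empty list of items")
    n = len(rater_a)
    # p_o: observed share of items where the two raters chose the same label.
    p_o = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # p_e: agreement expected by chance, from each rater's label frequencies.
    freq_a, freq_b = Counter(rater_a), Counter(rater_b)
    p_e = sum(freq_a[label] * freq_b[label] for label in freq_a) / (n * n)
    if p_e == 1:  # both raters used one identical label for every item
        return 1.0
    return (p_o - p_e) / (1 - p_e)


def disagreements(notes, rater_a, rater_b):
    """List the notes where the two labels differ: the ones worth rereading."""
    return [(note, a, b) for note, a, b in zip(notes, rater_a, rater_b) if a != b]


# Hypothetical notes and theme labels: yours vs. ChatGPT's.
notes = ["N1", "N2", "N3", "N4", "N5", "N6"]
mine = ["navigation", "navigation", "copy", "copy", "navigation", "copy"]
gpt = ["navigation", "copy", "copy", "copy", "navigation", "navigation"]
print(round(cohens_kappa(mine, gpt), 2))
print(disagreements(notes, mine, gpt))
```

A low kappa doesn’t tell you who is right; the `disagreements` list simply points you to the notes worth a second look, which is where a missed insight (or a hallucination) is likely to show up.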

This essentially involves three steps:

Exporting your notes into a .csv format (i.e., that giant spreadsheet), potentially using NotionAI

Trimming the spreadsheet to fit the “token limit” of ChatGPT (i.e., preventing it from being too long)

Choosing the right prompt to help break down the findings into a reasonable format.
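The trimming step can be sketched in Python with the standard `csv` module. The roughly-four-characters-per-token estimate is a common rule of thumb for English text, not ChatGPT’s actual tokenizer, and the column names, budget, and `trim_csv` helper are hypothetical; for exact counts you would use the model’s own tokenizer.

```python
import csv
import io


def estimate_tokens(text):
    """Rough token estimate: ~4 characters per token (rule of thumb, not the real tokenizer)."""
    return max(1, len(text) // 4)


def _row_to_csv(row, columns):
    """Serialize one dict row to a CSV line, keeping only `columns`."""
    buf = io.StringIO()
    writer = csv.DictWriter(buf, fieldnames=columns, extrasaction="ignore", lineterminator="\n")
    writer.writerow(row)
    return buf.getvalue()


def trim_csv(csv_text, token_budget, keep_columns=None):
    """Keep the header plus as many whole rows as fit in `token_budget`."""
    reader = csv.DictReader(io.StringIO(csv_text))
    columns = keep_columns or reader.fieldnames
    out = io.StringIO()
    csv.DictWriter(out, fieldnames=columns, lineterminator="\n").writeheader()
    used = estimate_tokens(out.getvalue())
    kept = 0
    for row in reader:
        line = _row_to_csv(row, columns)
        cost = estimate_tokens(line)
        if used + cost > token_budget:
            break  # stop before exceeding the budget; remaining rows are dropped
        out.write(line)
        used += cost
        kept += 1
    return out.getvalue(), kept


# Hypothetical export of session notes.
export = (
    "participant,page,note\n"
    "P1,Checkout,Could not find Save\n"
    "P2,Home,Liked the hero image\n"
    "P3,Search,Filter labels unclear\n"
)
trimmed, kept = trim_csv(export, token_budget=15, keep_columns=["page", "note"])
print(f"kept {kept} rows")
print(trimmed)
```

Dropping columns you don’t need (such as participant IDs) before cutting rows can leave room for more of the notes themselves.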

When you do that, you get a list of ChatGPT’s findings (some of which are probably inaccurate) that you can compare against your own, like this.

Looking over the complete list of findings, I can see that some are wrong. In addition, some of this feedback is so generic that it’s hard to see what action you could take on it.

At the same time, I could see some insights I had missed, such as the “naming conventions” piece. As a result, I can double-check my research and generate a preliminary user findings report more quickly.

This lets me finish user research faster, which is an increasingly valuable skill.

Providing user research feedback quickly is vital for startups

User research analysis can be tedious, but it’s a necessary step to uncover specific user insights that drive your design forward.

I’ve had a lot of miserable experiences doing so in the past. Whether it’s being trapped in a closet-sized room that quickly reached 90 degrees or working till 10 PM to get all the research coded, I know the pain of compiling all the details.

But things have come a long way since giant spreadsheets, and more importantly, I have learned how to avoid these time-consuming efforts out of necessity.

Startups and small organizations can’t wait two weeks for analysis; they often need it in a few days to drive action.

These tips, which I’ve learned in my Data-Informed Design journey, may help you. So next time you sigh, thinking about crunching a whole bunch of interview data, consider these steps to see if you can speed up the process.

Kai Wong is a Senior Product Designer, Data-Informed Design Author, and Top Design Writer on Medium. His new free book, The Resilient UX Professional, provides real-world advice to get your first UX job and advance your UX career.