Understanding metrics of interest

The first step towards utilizing quantitative data comes long before you even look at a dataset: deciding which metric your team cares about most.

I learned why this matters on my first Data Science assignment. I downloaded the dataset, set up a Jupyter Notebook, and then froze for a moment as I read over the prompt.

Once I had wrangled the data into a usable format, I realized something: if the prompt hadn’t told me exactly what to do and what to look at, I would have gotten lost staring at the data.

There were dozens of variables, not to mention thousands of rows: if I had had to pick out what seemed most interesting at this stage, I would have been clueless.

And the problem only got worse once I learned how often Data Science has to deal with messy, real-world problems.

The fuzziness of real-world problems

One of the lessons you learn when going from coursework to industry is that you’re sometimes asked to translate problems into terms you understand.

One of my favorite quotes about Data Science is this:

“A Product Manager or other stakeholder will not come up to you and ask you to create a supervised Machine Learning algorithm with 80% accuracy…. What they will do is tell you about some data and what problem they keep seeing.”

When working with a team, you often have to learn how to translate a business use case into a Data Science model (or other models) that you understand.

This fuzziness is not due to incompetence: your stakeholders know how to define things in terms of what they know. It's usually up to you to translate that into words that you understand, and vice versa.

So how can you address both of these problems: the fact that you won’t get much guidance in the real world, and the fact that you might get overwhelmed by data later on?

The key is getting your stakeholders to agree on which metrics you’ll look at.

Understanding metrics of interest

Metrics are easy to gloss over in a project meeting with your team. When project managers talk about specific KPIs (key performance indicators), you might not expect the discussion to be that useful for your own work.

However, metrics serve as a way to translate a use case between your expertise and your stakeholders’. To elaborate, let’s go back to the question I asked before:

How would you determine if a project was successful six months after you launched it?

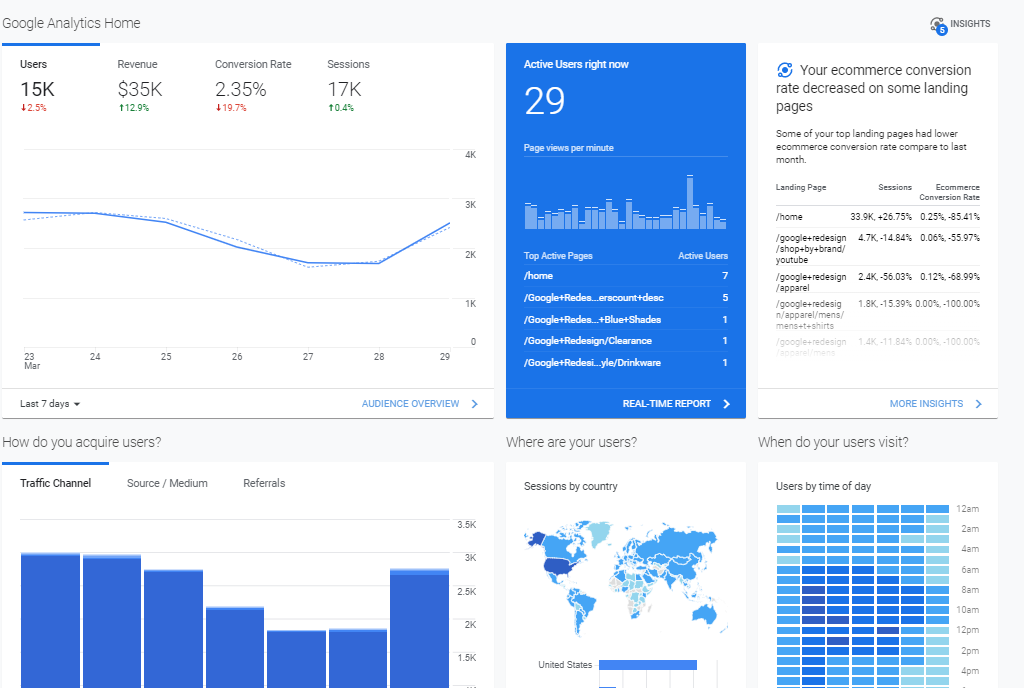

In my case, one of the most popular tools for answering this would be something like Google Analytics.

But you only need to open the program to see the problem.

Google Analytics does a good job of making its home screen simple and easy to read, but it can’t answer one of the most important questions: which metrics matter most to you?

Is a project successful if the makeup of your audience changed?

Is a project successful if the overall web traffic changed?

These are questions that you need to answer for yourself before looking at an analytics tool: no analytics program will help you without a clear understanding of what matters.

And you might find yourself just as lost when trying to figure that out.

But if you can figure out which metrics should be associated with the project ahead of time, you can not only save yourself a lot of trouble in testing but also save others (like your data team) some time.

Here’s how to make the best use of this.

Define your goal in terms of metrics

Whenever you start a project with your team, you may be among the first members to take action, especially if you’re a UX professional.

This action can take the form of conducting UX research, either with your stakeholders or your users. This research tends to be your introduction to the current workflow, your stakeholders’ goals, or the project in general.

Part of this process is deciding what research questions to ask, what data to collect, and how to analyze and present that data to your stakeholders, often several times, as you iterate on your designs to address the project goal.

Much of the time, this work focuses on the qualitative side. That isn’t surprising, given that interviews are among the most popular and common UX Research methods. But even with methods like surveys or A/B testing, many of my experiences have been light on quantitative analysis.

But one of the first lessons I learned from Data Science was that quantitative metrics are useful for one main reason: comparison.

Quantitative metrics are a way of answering the following questions:

Where do you want to spend your time and efforts making an impact?

What do you believe is good for your users?

If you only define your goals in qualitative terms, such as “I want to make the website more engaging,” it can be hard to say exactly how to measure them. Adding a quantitative layer to your goals, however, gives you a point of comparison in one of two ways.

If you have previous values for the metric, you already have a point of comparison: comparing last year’s values to current ones shows whether you’re trending in the right direction.

If you don’t, capturing a snapshot of your current performance lets you see how you compare to outside references such as the industry average.

According to data visualization pioneer Edward Tufte, at the heart of quantitative reasoning lies a single question: “Compared to what?”
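To make “Compared to what?” concrete, here is a minimal Python sketch of both kinds of comparison described above: against your own past value and against an outside reference. The function name `relative_change` and every number in it are hypothetical, chosen only for illustration; swap in your own metric and reference values.

```python
def relative_change(current: float, reference: float) -> float:
    """Change from `reference` to `current`, as a fraction of `reference`."""
    if reference == 0:
        raise ValueError("reference value must be non-zero")
    return (current - reference) / reference


# Hypothetical values for one metric, e.g. a task completion rate.
current_rate = 0.62
last_year_rate = 0.55     # historical point of comparison
industry_average = 0.70   # external point of comparison (assumed figure)

print(f"vs. last year:        {relative_change(current_rate, last_year_rate):+.1%}")
print(f"vs. industry average: {relative_change(current_rate, industry_average):+.1%}")
```

The same current value can look like progress against last year and like a shortfall against the industry, which is exactly why the comparison point has to be agreed on up front.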

A goal that uses quantitative metrics, or combines qualitative and quantitative ones, lets you capture relevant, measurable data during data collection. That data, in turn, can be organized into a dataset for analysis.

One side benefit of this is that it keeps your stakeholders focused on your goal.

Maintaining a project focus

When you first start on a project, the goal may seem clearly defined enough to fit on a single page with all of your stakeholders signing off on it.

But as you move further along in the project, the goal can fade into the background. Judging from some of your later meetings, you might think the project’s purpose is simply to determine which search results screen is best.

But one of the things I learned from Data Science is why these metrics matter: they keep everyone focused on the goal through one easy-to-understand, straightforward idea.

Imagine your stated initial goal was to increase the overall revenue for your website.

Well, there are many ways to do that.

Are you aiming to increase the number of visitors to the website?

Get existing visitors to spend more time/money there?

Reduce costs necessary for some of the functions?

Each of those is a slightly different goal, and each may make a different metric the focus of the project. But that isn’t immediately clear when the goal is stated in general terms.

If you defined your goal simply as “Trying to increase revenue,” team members might have differing ideas about what to do, which leads to friction. For example, if the Project Manager believes that we can’t gain any more revenue from existing users, they might question why you would want to interview those users.

Some of you may feel this is jumping too far ahead, and you’re right. Except in rare circumstances, you likely won’t know your metric of interest at the start of the project; it tends to develop over the course of the project.

However, by keeping your attention on more than just the current user research topic, you can work toward a metric that the team can use to judge success or failure at a later date.

For example, after doing user research about the current workflow, you might identify several major pain points guiding users in the wrong direction, causing them to abandon the website. We might decide that our project goal is to reduce the abandonment rate because of these constant and significant issues. Getting the team to focus on that specific metric of interest will help them define the project by that goal, reducing the likelihood of conversations getting lost in the details later on.
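If abandonment rate does become the metric, a step-by-step breakdown can show where users drop off. The sketch below is a minimal illustration, assuming you have already reduced each session to the furthest step the user reached; the step names in `FUNNEL`, the function names, and the sample sessions are all hypothetical.

```python
from collections import Counter

# Hypothetical funnel steps, in order; the last step means the task succeeded.
FUNNEL = ["landing", "search", "results", "checkout", "confirmation"]


def step_abandonment(furthest_steps: list[str]) -> dict[str, float]:
    """Share of users who reached each step but went no further.

    `furthest_steps` holds, for every session, the furthest funnel step
    the user reached (an assumed, pre-processed input).
    """
    position = {step: i for i, step in enumerate(FUNNEL)}
    stopped_at = Counter(position[step] for step in furthest_steps)
    rates = {}
    for i, step in enumerate(FUNNEL[:-1]):  # stopping at the last step is success
        reached = sum(count for j, count in stopped_at.items() if j >= i)
        rates[step] = stopped_at[i] / reached if reached else 0.0
    return rates


def overall_abandonment(furthest_steps: list[str]) -> float:
    """Share of sessions that never reached the final step."""
    if not furthest_steps:
        raise ValueError("need at least one session")
    finished = sum(1 for step in furthest_steps if step == FUNNEL[-1])
    return 1 - finished / len(furthest_steps)


# Hypothetical sessions, reduced to the furthest step each user reached.
sessions = ["landing", "search", "results", "results", "checkout",
            "confirmation", "confirmation", "results", "search", "confirmation"]
for step, rate in step_abandonment(sessions).items():
    print(f"{step:>9}: {rate:.0%} abandoned here")
print(f"overall abandonment: {overall_abandonment(sessions):.0%}")
```

The overall rate is the number you would report to stakeholders; the per-step rates point at the pain points the research uncovered.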

So how can you do this? By asking four specific questions.

The dimensions of data

So how do you figure out which metric should be your focus? According to the book Designing with Data, this is where you should ask the following four questions:

Why are you collecting data? Are you collecting behavioral or attitudinal data?

When are you collecting data? Are you running a longitudinal study or capturing a snapshot in time?

How are you collecting data? Are you using quantitative or qualitative methods?

How much data are you collecting? How many users are you seeking out?

These questions may be familiar if you’ve done user research before. If you haven’t, they define what’s called the dimensions of data.

Answering these questions helps you determine what type of experiment you need to run, and it also helps define the research question you’re trying to answer.

You won’t necessarily be able to pin down your metric of interest from these answers alone, but these types of questions (and this kind of thinking) are crucial for getting the team to think about how to define the metrics you’ll want.

A metric of interest is your business’s focus: the factor that determines whether a design is a success or a failure. It relates directly to the overall project goal, but unless you’re a project manager or in a similar role, defining it is likely not your call. Instead, you want to figure out which metrics will probably be the focus of your own efforts.

For example, imagine that you’re redesigning an existing system that doesn’t work very well. During project discovery, if you can interview stakeholders or existing users, here’s how you might answer these questions.

Why are you collecting data? We don’t have a clear enough picture of what our users are doing or where they’re running into problems. We want to understand what users are doing, where they’re getting stuck in the process, and whether they’re able to complete their tasks or abandon them outright.

When are you collecting data? We plan to run multiple iterations of testing, with various changes to the current process, to arrive at a redesign that provides a good User Experience.

How are you collecting data? We plan to primarily do user interviews to understand how the current process works, perhaps with some A/B testing along the way. We may not know much more than that at this point.

How much data are you collecting? We plan to collect as much data as possible to obtain statistical significance.
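That last answer leans on statistical significance. As one hedged illustration, suppose the metric ends up being a task completion rate, compared between the old system and a redesign. A two-proportion z-test is one common way to check whether the difference is larger than chance would explain. The function name and counts below are hypothetical, and the normal approximation the test relies on only holds with reasonably large samples.

```python
import math


def two_proportion_z_test(successes_a: int, n_a: int,
                          successes_b: int, n_b: int) -> tuple[float, float]:
    """Two-sided z-test for a difference between two completion rates.

    Returns (z statistic, two-sided p-value). Relies on the normal
    approximation, so each group needs a reasonable number of both
    completions and failures.
    """
    p_a = successes_a / n_a
    p_b = successes_b / n_b
    # Under the null hypothesis both groups share one rate, so pool them.
    pooled = (successes_a + successes_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        raise ValueError("all attempts succeeded or all failed; test is undefined")
    z = (p_b - p_a) / se
    # Two-sided p-value: 2 * (1 - Phi(|z|)) equals erfc(|z| / sqrt(2)).
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Hypothetical counts: 120 of 200 participants completed the task on the
# old system, 150 of 200 on the redesign.
z, p = two_proportion_z_test(120, 200, 150, 200)
print(f"z = {z:.2f}, p = {p:.4f}")
```

Interview-heavy research rarely produces samples this large, which is one reason “as much data as possible” often ends up meaning an A/B test rather than interviews.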

Given those answers, what metrics might you be interested in?

As a result, you might choose some metrics like the following:

Task completion rate (the share of people who can currently complete the task)

Time on task (how long it takes to complete a task)

Error rate (do users click on the wrong thing and need to backtrack?)

Benchmark (does the redesign work better than the old system?)

System Usability Scale, or SUS (a standardized questionnaire about the usability of the website)
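Several of these metrics reduce to simple arithmetic over test sessions. The sketch below assumes a hypothetical `TaskAttempt` record per participant and computes task completion rate, median time on task, and error rate. Counting only successful attempts for time on task, and defining error rate as the share of attempts with at least one error, are assumptions; your team may define them differently.

```python
from dataclasses import dataclass
from statistics import median


@dataclass
class TaskAttempt:
    """One participant's attempt at one task (a hypothetical record format)."""
    completed: bool
    seconds: float  # time from starting the task to finishing or giving up
    errors: int     # wrong clicks that forced the participant to backtrack


def summarize(attempts: list[TaskAttempt]) -> dict[str, float]:
    if not attempts:
        raise ValueError("need at least one attempt")
    n = len(attempts)
    successful = [a for a in attempts if a.completed]
    return {
        # Task completion rate: share of attempts that finished the task.
        "completion_rate": len(successful) / n,
        # Time on task, counted over successful attempts only (one common convention).
        "median_seconds": median([a.seconds for a in successful]) if successful else float("nan"),
        # Error rate: share of attempts with at least one error.
        "error_rate": sum(1 for a in attempts if a.errors > 0) / n,
    }


# Hypothetical results from four participants.
attempts = [
    TaskAttempt(completed=True, seconds=42.0, errors=0),
    TaskAttempt(completed=True, seconds=65.5, errors=2),
    TaskAttempt(completed=False, seconds=120.0, errors=3),
    TaskAttempt(completed=True, seconds=51.0, errors=0),
]
print(summarize(attempts))
```

Running the same summary on the old system and on the redesign gives you the raw material for the benchmark comparison.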

After this, you need to decide which metrics are of interest to your team. On your end, you want to choose metrics that are measurable through user behavior, so that you can actually capture them during data collection.

Choosing metrics that align with your goal can sometimes be difficult. In that case, you may want to consider what are called proxy metrics: metrics that anticipate a key metric when the key metric itself is hard to measure.

Coursera, for example, uses test completion as a proxy metric to anticipate one of its key metrics, credential completion. This is because it can take a long time (weeks to months) for a user to earn a credential, and Coursera may not be able to wait that long to evaluate a change.

From our list, the benchmark may be hard to measure, because your user test may cover only part of the website’s process. You would have to complete multiple user tests covering the entire website before you could generate it.

However, if that were the metric you cared about most, you could use the System Usability Scale (SUS) score as a proxy metric, providing a baseline that might anticipate the benchmark. After all, if the website’s overall usability goes up, the redesign might be considered a success.
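SUS scores are straightforward to compute. The standard scoring uses ten questions answered on a 1 to 5 scale: odd-numbered items contribute the response minus 1, even-numbered items contribute 5 minus the response, and the sum is multiplied by 2.5 to give a score from 0 to 100. Here is a minimal sketch of that scoring; the function names and sample responses are hypothetical.

```python
def sus_score(responses: list[int]) -> float:
    """Score one participant's SUS questionnaire on a 0-100 scale.

    `responses` are the ten answers in questionnaire order, each from 1 to 5.
    Odd-numbered items are positively worded, even-numbered items negatively.
    """
    if len(responses) != 10 or any(r not in range(1, 6) for r in responses):
        raise ValueError("expected ten responses, each between 1 and 5")
    total = 0
    for item_number, response in enumerate(responses, start=1):
        if item_number % 2 == 1:
            total += response - 1  # odd items: more agreement is better
        else:
            total += 5 - response  # even items: more agreement is worse
    return total * 2.5


def mean_sus(participants: list[list[int]]) -> float:
    """Average SUS score across participants."""
    if not participants:
        raise ValueError("need at least one participant")
    scores = [sus_score(r) for r in participants]
    return sum(scores) / len(scores)


# Hypothetical questionnaire responses from two rounds of testing.
baseline = [
    [3, 3, 3, 3, 3, 3, 3, 3, 3, 3],
    [4, 2, 3, 3, 4, 2, 3, 3, 4, 2],
]
redesign = [
    [5, 1, 5, 1, 5, 1, 5, 1, 5, 1],
    [4, 2, 4, 2, 4, 2, 4, 2, 4, 2],
]
print(f"baseline SUS: {mean_sus(baseline):.1f}")
print(f"redesign SUS: {mean_sus(redesign):.1f}")
```

Because SUS can be collected at the end of any test session, even one covering only part of the website, it is available long before a full benchmark is.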

Thinking about these metrics ahead of time has several benefits:

First, it clarifies the common goal with the rest of the team. If we all know that we’re trying to improve a specific metric, it becomes easier to question assumptions we might be holding.

Second, it allows you to articulate the potential impact of design in service of those goals. By showing how the actions you take on the design side can influence this metric, you can demonstrate the business value of design decisions.

Finally, it can sharpen your instincts as a designer. By comparing your intuition about users’ behavior and needs with the evidence that data provides, you’ll be able to refine your knowledge based on empirical evidence.

But a metric by itself isn’t that helpful in guiding your design process. Making proper use of it requires the next step: defining hypotheses with our metrics.