The user research technique that can help you in a time crunch

How to avoid bad design decisions due to time pressure

I never thought I’d address one of my most dreaded phrases by trying to organize my user research findings. But that’s what happened when I took a proactive approach in organizing my user research notes.

Creating a high-level summary in my spreadsheet not only allowed me to quickly revisit user research and understand the context; it also allowed me to compare design alternatives across design iterations. And that was a crucial factor in reconsidering design alternatives in a time crunch.

Avoiding poor design decisions due to time pressure

I was forced to examine other possible design alternatives for a feature when it turned out that our design solution wouldn’t work due to certain legal and organizational policies.

This was part of a large-scale redesign of a project: we had interviewed over 25 subject matter experts (SMEs) and gone through 4 rounds of iterative user testing. In addition, I was in charge of compiling notes and data analysis.

But this policy issue came up just 1 month before our expected due date. As a result, there was huge pressure to quickly find a design solution, with the leading idea being to use what we had with the current version.

In the past, this is where I would hear a phrase that I dreaded if I offered any alternatives: “I think that might be a great idea, but we just don’t have the time to revisit this right now.”

That phrase would usually be accompanied by a promise to revisit the idea in the next version, which meant it would usually get lost in the weeds. However, because I had taken a proactive approach towards improving data quality with user research (due to a painful lesson I had learned earlier), it was fairly simple to research and examine design alternatives. And the first step of that process was creating a high-level summary of my user research findings in my spreadsheet.

Summarize to revisit user research easily

We often skip a step when analyzing our user research: we go from a user spreadsheet that contains a lot of hard-to-read data to a PowerPoint presentation of key findings.

We may not take the time to summarize or condense the spreadsheet into something easy to read before jumping to the PowerPoint. But the more design iterations you have, the more helpful it can be to have the data formatted in a way that allows for easy comparisons across iterations. Not to mention the ability to review user feedback in real time: if everyone but one person had a response to a particular question, it’s easier to send a follow-up email immediately after an interview rather than six weeks later.

So how do you create a summary of your research findings in a spreadsheet? It usually starts by taking a facilitator’s guide, which is the list of questions you intend to ask your user, and marking those as categories in a spreadsheet.
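As a rough sketch of that first step, here is one way it might look in Python rather than in a spreadsheet app. Everything in it is an assumption made for illustration: the questions in `FACILITATOR_GUIDE`, the participant IDs, the answers, and the helper names `build_summary_rows` and `missing_answers` are all hypothetical. It turns each planned question into a column, fills in one row per participant, and lists the gaps you might want to follow up on right after an interview.

```python
import csv
import io

# Hypothetical facilitator's guide: the questions planned for each interview.
FACILITATOR_GUIDE = [
    "What is your role?",
    "How do you currently complete this task?",
    "What did you think of the new layout?",
]


def build_summary_rows(guide, notes_by_participant):
    """Build a summary sheet: one row per participant, one column per question."""
    rows = [["participant"] + list(guide)]
    for participant, answers in notes_by_participant.items():
        # Leave a blank cell for any question without a recorded answer.
        rows.append([participant] + [answers.get(q, "") for q in guide])
    return rows


def missing_answers(guide, notes_by_participant):
    """List (participant, question) pairs that still need a follow-up."""
    return [
        (participant, question)
        for participant, answers in notes_by_participant.items()
        for question in guide
        if not answers.get(question, "").strip()
    ]


# Made-up interview notes, keyed by participant and then by question.
notes = {
    "P1": {
        "What is your role?": "Senior analyst",
        "How do you currently complete this task?": "Manually, in email",
        "What did you think of the new layout?": "Clear and fast",
    },
    "P2": {
        "What is your role?": "Junior analyst",
        "How do you currently complete this task?": "With a shared template",
    },
}

rows = build_summary_rows(FACILITATOR_GUIDE, notes)
gaps = missing_answers(FACILITATOR_GUIDE, notes)

# Write the summary as CSV text so it can be pasted into any spreadsheet tool.
buffer = io.StringIO()
csv.writer(buffer).writerows(rows)
summary_csv = buffer.getvalue()

print(summary_csv)
print("Follow up on:", gaps)
```

Keeping the questions as fixed column headers means every round of testing produces rows with the same shape, which is what makes comparisons across iterations straightforward later on.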

But doing this is only the start of creating a high-quality dataset. The next thing we want to do is make sure that we can use the data in a way that’s easy to transform. To do that, we need to create usable metadata.

Understanding metadata

https://xkcd.com/1459/

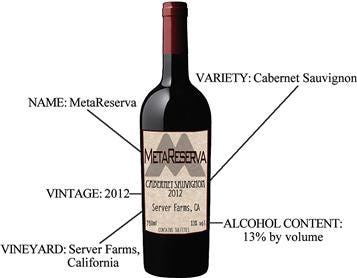

Metadata is one of those things that nobody really notices until it’s missing. According to Hunter Whitney, metadata is defined as data about data, such as the origin or context of the original data.

To put it another way, metadata is the way to know that you’re drinking wine from a certain year instead of a bottle of old vinegar.

Source: https://learning.oreilly.com/library/view/data-insights/9780123877932/

These additional pieces of data help to bring structure and definition to the dataset you’re looking at. But only if you actually use them.

We often collect metadata in the form of demographics: we ask about role, level of expertise, age, or many other things at the start of a user interview. However, do you usually use it in your analysis?

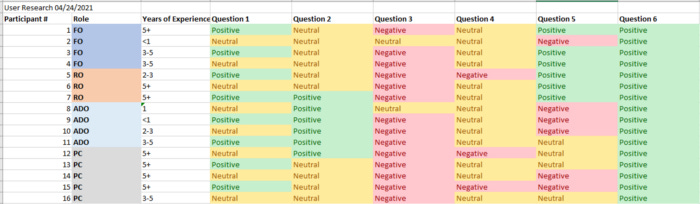

I did not really pay attention to these things much, other than averaging them out in a slide (e.g., our users tended to have X amount of experience…). But by standardizing (and perhaps color-coding) your metadata, you might begin to see interesting patterns emerge.

In our case, one of our design alternatives was well-liked among users with mid to senior-level positions. Still, those in entry-level positions (i.e., those who would be doing the majority of the work) didn’t understand it. That’s something that would have taken a lot longer to understand if we didn’t have a clear way to break things down. But there’s another thing that can be a quick way to summarize research: tagging for sentiment analysis.
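To make that kind of breakdown concrete, here is a minimal sketch in Python. It is not the spreadsheet or the data from this project: the job titles, the `LEVELS` mapping, the `understood` flag, and the sample responses are all invented for illustration. The idea is simply to standardize a free-text metadata field first, then count responses per standardized level.

```python
from collections import defaultdict

# Hypothetical mapping from free-text job titles to standardized levels.
LEVELS = {
    "junior analyst": "entry",
    "analyst": "mid",
    "senior analyst": "senior",
    "team lead": "senior",
}


def standardize_level(raw_title):
    """Normalize spacing and case, then map the title to a known level."""
    return LEVELS.get(raw_title.strip().lower(), "unknown")


def understood_by_level(responses):
    """Count, per level, how many participants understood the design."""
    counts = defaultdict(lambda: {"understood": 0, "total": 0})
    for response in responses:
        level = standardize_level(response["title"])
        counts[level]["total"] += 1
        if response["understood"]:
            counts[level]["understood"] += 1
    return dict(counts)


# Made-up responses for one design alternative.
responses = [
    {"title": "Junior Analyst", "understood": False},
    {"title": " junior analyst", "understood": False},
    {"title": "Analyst", "understood": True},
    {"title": "Senior Analyst", "understood": True},
    {"title": "Team Lead", "understood": True},
]

breakdown = understood_by_level(responses)
for level, result in breakdown.items():
    print(f"{level}: {result['understood']} of {result['total']} understood")
```

Without the standardizing step, "Junior Analyst" and " junior analyst" would land in separate groups, and a pattern like this one would be much harder to spot.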

Real-time tagging of sentiment

https://www.kdnuggets.com/2018/03/5-things-sentiment-analysis-classification.html

One of the most useful things that I’ve been adding to my user research notes has been tags. These allow me to quantify certain types of interactions (such as task success or user wants) and to quickly compile the ones that are common among different participants. But many of these tags are things that you need to add after interviews rather than in real time.

However, one set of tags fits in well with taking notes in real time: positive, neutral, and negative tags. Quantifying a user’s response in this way not only adds another layer of data, it’s also the basis for a type of analysis called sentiment analysis.

Seeing if a user thought well of a particular feature and then comparing those responses across all your users is a powerful tool. This is not necessarily that visually appealing (and you probably wouldn’t want to show it to your team), but it’s a rapid way to get a sense of whether or not something was perceived well. If the overall feedback is positive, it may be worth digging into the research to learn exactly what they said. And taking this approach for multiple rounds of user testing allowed me to figure out a better design alternative than what the team was proposing.
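Here is a small Python sketch of what comparing those tags across rounds might look like. Treat it as an illustration under assumptions: the design names, the rounds, the tagged notes, and the simple +1 / 0 / -1 scoring in `SCORES` are all made up, and this is a tally of manually applied tags rather than an automated sentiment analysis.

```python
# Assumed scoring for manually applied tags; adjust it to your own scheme.
SCORES = {"positive": 1, "neutral": 0, "negative": -1}


def net_sentiment(tagged_notes):
    """Sum tag scores per design and per round.

    tagged_notes is a list of (round_name, design, tag) tuples.
    Returns {design: {round_name: net_score}}.
    """
    result = {}
    for round_name, design, tag in tagged_notes:
        per_round = result.setdefault(design, {})
        per_round[round_name] = per_round.get(round_name, 0) + SCORES[tag]
    return result


# Made-up tags captured while taking interview notes.
tagged_notes = [
    ("round 1", "design A", "positive"),
    ("round 1", "design A", "negative"),
    ("round 1", "design B", "positive"),
    ("round 1", "design B", "positive"),
    ("round 2", "design A", "negative"),
    ("round 2", "design B", "positive"),
    ("round 2", "design B", "neutral"),
]

summary = net_sentiment(tagged_notes)
for design, per_round in summary.items():
    print(design, per_round)
```

A net score hides the reasons behind each reaction, so it works best as a pointer to which notes are worth rereading, not as a finding on its own.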

Address time pressure decisions by referencing user research summaries

At this point, you may be thinking, what’s the point of putting in this extra effort to maintain such a clean dataset?

The answer to this is simple: it’s so you can always have your research in your back pocket. You often don’t have time to dig through (and compile) research findings when there’s a lot of time pressure. But being able to quickly point to a research summary and show that users liked a design alternative is a way of convincing stakeholders to invest in one more round of user testing.

That’s fortunately what happened. We were able to take two design alternatives that had tested well in the past and quickly do another round of user testing to find a better solution to the sudden problem.

So if you’ve ever run into your team making bad design decisions due to a time crunch, consider taking the time to add a summary to your user research findings spreadsheet.

It might just save you more headaches than you realize.

Kai Wong is a UX Designer, Author, and Data Visualization advocate. His latest book, Data Persuasion, talks about learning Data Visualization from a Designer’s perspective and how UX can benefit Data Visualization.