How to test for navigation flaws if you have limited UX resources

How to adapt user testing to serve as a limited card sort

UX often wears many hats, and sometimes that includes Information Architecture.

One of my first big projects as a solo UX professional had bad Information Architecture. Like many of you might, I had limited IA experience, knowing just a little about designing headers, side navigation, and footers. But sometimes the issues are obvious enough to require changes and testing. So when both your experience and your mentor tell you that there are navigation issues, it is best to listen.

However, the sheer amount of UX work I had, combined with limited usability resources and a short list of approved organizational software, meant that spending resources solely on card sorting or tree testing wasn’t a good idea.

User testing, with some way to test navigation built in, was probably the best use of my resources. And this ultimately wasn’t a big deal: it’s not hard to roll design-related Information Architecture questions into a usability test.

Here’s how you do that.

The basics of Information Architecture for UX

“Put like with like. Decide what to retain vs. what to trash or donate. Then label and store items based on how you live.” — 97 Things every UX Practitioner should know

Information Architecture is a complex field that many professionals do great work in, and I’m in no way advocating UX take over their responsibilities.

Organizing and classifying digital stuff so people can find and use it is vital to creating good IA and UX. But how do you figure out if people can find and use everything in the navigation? That’s the question UX should tackle.

You can sometimes stumble into IA issues when user testing. For example, users may ignore the navigation, or they consistently click on the wrong navigation item. However, this can sometimes be piecemeal, as we’re touching on common user scenarios rather than testing every navigation option. As a result, we might understand that users confuse “Recent Trends” with “Recent Rates,” but not whether the information hierarchy makes sense to our users.

https://tinyurl.com/ye27mwkd

So what can we do if understanding navigation (and IA issues) is a research aim? There are a couple of things that we can do during the research planning and user testing phases that can help.

Adapting your research planning and user testing to include IA

Early in the user research, one of the most important things to do is plan precisely how and what you’re going to test. There are three parts to this:

A draft prototype ready to test

A research plan comprising objectives, tasks, and scenarios

Participants

If we want to test for Information Architecture issues, we’re probably doing what’s called evaluative IA testing. We’re seeing if users can correctly find things in our organizational structure. If you were solely testing the IA, you would probably create enough scenarios to cover most navigation.

Unfortunately, this might not work for user testing.

We’re probably already testing a couple of scenarios focused on usability issues and functionality beyond just navigation. Adding a dozen more scenarios to the list can exhaust the user (not to mention the added workload of having designers mock up more sub-navigation screens).

So there are two things we need to do to address IA issues in our user research:

Make sure you capture navigation issues explicitly within your main testing scenarios.

Create short, optional scenarios for gathering specific navigation feedback.

Here are some ways you can do that.

Look for Search vs. Navigation UX KPIs and collect them during testing

Google Analytics can become a handy tool in understanding a snapshot of what users currently do with the system.

In Google Analytics, one UX KPI is particularly useful for checking whether your site has navigation problems: Search vs. Navigation. You can see an overview of this if you go to the Behavior tab, click All Pages under Site Content, and then click Navigation Summary.

From here, if you select a specific page, you can see if people used a particular search page or the navigation to move forward.

However, it’s probably more helpful to track this during user testing instead: unless there’s a glaring issue, the analytics numbers alone likely won’t yield valuable data.

This metric keeps track of how many users use the Navigation instead of Search for your tasks, and this type of ratio can be beneficial in understanding if people can’t find what they’re looking for.

For example, if a common search keyword is “yoursitename user preferences page,” and 75% of people use Search to get there, that suggests it’s hard for users to reach that page through the navigation menu.
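If you track this during user testing, the ratio is easy to compute from your note-taker's observations. Here is a minimal Python sketch of that idea. The observation format, task names, and function name are all hypothetical and only there for illustration; adapt them to however your team actually records sessions.

```python
from collections import Counter

# Hypothetical note-taker log: (participant, task, method used to get there).
observations = [
    ("P1", "open user preferences", "search"),
    ("P2", "open user preferences", "search"),
    ("P3", "open user preferences", "search"),
    ("P4", "open user preferences", "navigation"),
    ("P1", "find recent rates", "navigation"),
    ("P2", "find recent rates", "navigation"),
]


def search_share_by_task(observations):
    """Return {task: fraction of attempts that used search instead of navigation}."""
    totals = Counter()
    searches = Counter()
    for _participant, task, method in observations:
        totals[task] += 1
        if method == "search":
            searches[task] += 1
    return {task: searches[task] / totals[task] for task in totals}


shares = search_share_by_task(observations)
for task, share in shares.items():
    print(f"{task}: {share:.0%} used search")
```

With only a handful of participants, treat a high search share as a prompt to dig into why people skipped the menu rather than as a statistic to report on its own.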

Usually, most websites want the users to use Navigation instead of Search, but this can vary depending on the website. For example, Amazon wants you to use the Search function, as Navigating through menus to find a specific brand product is a lot more tedious. Using the actual metrics or the ones you gather during user testing can solve an issue that IA recommendations sometimes run into: the scope and impact of the problem (or the recommended solution) may not be that visible.

Stakeholders might not understand why changing the name of a sub-navigation item from “Research & Tools” to “Software for Researchers” is a big deal, but seeing the metrics might help them understand the impact. Of course, metrics can only tell half the story: it’s up to user research to gather enough insights, data, and findings to understand the navigation issues and what users might suggest as alternatives.

Explicitly state navigation issues note-takers should follow during testing:

Many note-takers do a good job capturing interesting navigation observations. However, ask them to capture specifics explicitly, like the navigation path users took (“Products → Hardware”) or what a user did in terms of navigation (“Hesitated a bit before clicking on Software”).

If you believe IA to be a big issue with the site that you’re user testing, some things that we can ask about or emphasize include:

Search vs. Navigation KPIs (“Make a note of whether they use the search bar or navigation to complete a task.”)

Hesitation/confusion about navigation (“They hovered over two options before choosing one.”)

Comments/Suggestions about navigation (“This wasn’t where I expected it to be.”)

Etc.
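If your note-takers work in a spreadsheet or script, the items above can be turned into a small structured template so every session captures the same fields. This is only a sketch: the `NavigationNote` record, its field names, and the sample data are assumptions made for illustration, not a standard format.

```python
from collections import Counter
from dataclasses import dataclass
from typing import List


@dataclass
class NavigationNote:
    """One navigation observation recorded by a note-taker (hypothetical format)."""
    participant: str
    task: str
    path: List[str]            # e.g. ["Products", "Hardware"]
    used_search: bool = False  # Search vs. Navigation KPI
    hesitated: bool = False    # hovered, paused, or backtracked
    comment: str = ""          # anything the user said about the navigation


def hesitation_counts(notes):
    """Count hesitations per navigation item the user ended up clicking."""
    counts = Counter()
    for note in notes:
        if note.hesitated and note.path:
            counts[note.path[-1]] += 1
    return counts


notes = [
    NavigationNote("P1", "find drivers", ["Products", "Software"], hesitated=True),
    NavigationNote("P2", "find drivers", ["Products", "Hardware"]),
    NavigationNote("P3", "find drivers", ["Products", "Software"], hesitated=True,
                   comment="This wasn't where I expected it to be."),
]

counts = hesitation_counts(notes)
print(counts.most_common())
```

Tallying hesitations by the item clicked is one simple way to see which labels are causing doubt; the quotes in the comment field are what explain why.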

However, there’s one more thing that you can do to get extra IA feedback during a user test: have users give direct feedback about the navigation.

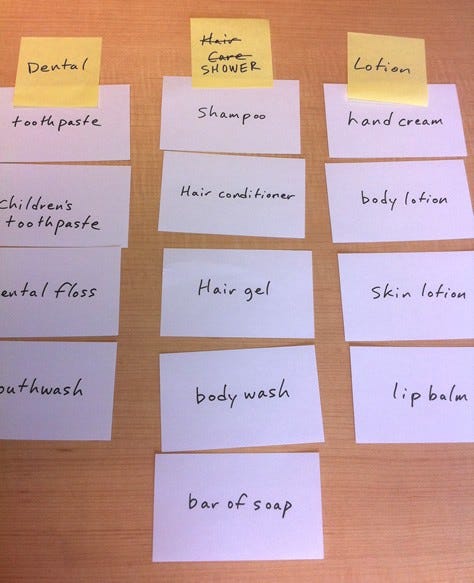

Optional card sorting scenarios and the Modified-Delphi card sort:

While your primary research scenarios will cover usability and Information Architecture, there may be specific navigation options you still want to ask about that those scenarios don’t touch. Fortunately, Information Architecture scenarios are short (just a screen or two), as you’re just asking the user where to click if they want to accomplish something.

As a result, creating optional scenarios is pretty straightforward. You will typically run them after you finish your primary scenarios, when the user might already be tired, so you don’t want to overwhelm them with too many tasks. This is where you might want to use what’s called a Modified-Delphi Card Sort.

https://www.uxmatters.com/mt/archives/2011/06/comparing-user-research-methods-for-information-architecture.php

This card sort creates one persistent organizational structure across multiple participants by saving what each participant does. If the first participant changes the organizational structure, that change carries over to the second participant, who can change something else.
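To make the carry-over concrete, here is a minimal Python sketch of that idea. It is my own simplified illustration rather than a formal definition of the method: the navigation is a plain dictionary of header to items, each participant's changes are a list of moves, and the "Pricing" item and the specific moves are made up for the example.

```python
import copy


def apply_session(structure, moves):
    """Return a new structure after one participant's moves.

    Each move is (item, from_header, to_header). The input is left untouched
    so every participant's starting point is preserved in the history.
    """
    updated = copy.deepcopy(structure)
    for item, source, target in moves:
        updated[source].remove(item)
        updated.setdefault(target, []).append(item)
    return updated


# Starting navigation (hypothetical contents).
navigation = {
    "Products": ["Hardware", "Software", "Pricing"],
    "Research & Tools": ["Recent Trends", "Recent Rates"],
}

# One list of moves per participant, in session order.
sessions = [
    [("Pricing", "Products", "Research & Tools")],       # participant 1
    [("Recent Rates", "Research & Tools", "Products")],  # participant 2
]

# Each participant starts from the structure the previous one left behind.
history = [navigation]
for moves in sessions:
    history.append(apply_session(history[-1], moves))

final = history[-1]
print(final)
```

Keeping the full history, not just the final structure, lets you see which changes later participants kept and which they reverted.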

The easiest way to do this in most prototype software is to have a page with two sets of navigation trees. The first shows a read-only, flat version of the current navigation, and the second has the navigation in an editable format (with some save/submit button to record the answers).

You should keep this sort of feedback and structure separate from the main usability tests so that it doesn’t bias or disrupt the results of your user test. Keeping it separate also lets you choose only a few optional scenarios to test with each user. So, for example, one participant may get an optional scenario regarding Header 1 and 2, while another may get a scenario involving Header 2 and 3.

You want enough overlap between scenarios so that no navigation option is addressed by just one person, and this can often be a way of collaborating with users to build a better navigational structure. We can then assemble a rough organizational structure that multiple participants have tested, along with our usability test.
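One simple way to plan that overlap is to give each participant a sliding window of headers, wrapping around at the end. The sketch below is an assumption about how you might schedule it, not a prescribed method; the function name, participant IDs, and window size of two are all illustrative.

```python
from collections import Counter


def assign_scenarios(headers, participants, per_participant=2):
    """Give each participant a sliding window of headers, wrapping around."""
    assignments = {}
    for i, participant in enumerate(participants):
        assignments[participant] = [
            headers[(i + offset) % len(headers)]
            for offset in range(per_participant)
        ]
    return assignments


headers = ["Header 1", "Header 2", "Header 3"]
participants = ["P1", "P2", "P3"]

assignments = assign_scenarios(headers, participants)

# How many participants looked at each header.
coverage = Counter(h for assigned in assignments.values() for h in assigned)

for participant, assigned in assignments.items():
    print(participant, assigned)
print(dict(coverage))
```

Here P1 gets Header 1 and 2, P2 gets Header 2 and 3, and P3 wraps around to Header 3 and 1, so every header is seen by two people while each person only handles two short scenarios.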

Reminder: look at navigation after the user completes tasks

Lastly, it would be best to ensure that the IA task is one of the last things (and possibly an optional thing) to test for.

Users’ first impressions of the navigation are helpful, but sometimes it’s more important to get an in-depth sense of how they feel about it after completing several tasks. Testing navigation first not only makes it much harder for users to give their impressions, it can also bias the way they approach tasks later on.

Therefore, it’s most helpful only to ask this at the end, after the user has a stronger sense of navigating and using the website.

And applying this can yield many insights.

UX practitioners wear many hats.

In an ideal organization, you might have an Information Architect, which means you don’t need to go through this trouble. But sometimes, UX practitioners have to wear the IA hat, like they might with UX Research, UX Strategy, and Content Strategy. This can be especially true if you’re one of a few UX people in the organization.

In that case, spending extra time during user testing to gather some feedback about the navigation can be incredibly useful in addressing many usability problems.

Thinking about navigation and information architecture during user testing can yield systematic observations about where these problems lie and how to fix them. And this will result in better UX, even if Information Architecture isn’t one of your job responsibilities.

Kai Wong is a UX Specialist, Author, and Data Visualization advocate. His latest book, Data Persuasion, talks about learning Data Visualization from a Designer’s perspective and how UX can benefit Data Visualization.